Unicode attacks and test cases: IDN and IRI display, normalization and anti-spoofing

16 Dec 2008

Internationalized Resource Identifiers (IRIs) are a new take on the old URI (Uniform Resource Identifier), which RFC 3986 restricts to a subset of ASCII characters: mainly lower and upper case letters, digits, and some punctuation. IRIs were anticipated many years ago by Martin Dürst and Michel Suignard, and formalized in RFC 3987. Together with IDN (Internationalized Domain Names), IRIs bring Unicode to the domain name world, allowing people to register domain names in their native language rather than being forced to use English.

It was apparent long ago that spoofing attacks would be a serious problem, and that we'd need a system to deal with them. Anti-spoofing protections are sort of built in to the specifications, primarily through Nameprep, Stringprep and Punycode. Nameprep is a profile of Stringprep. In other words, Stringprep defines all the nitty-gritty details available, and Nameprep selects the subset of those details that should be used when handling IDNs. Whew, let's pause for a deep breath.

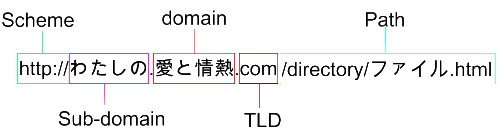

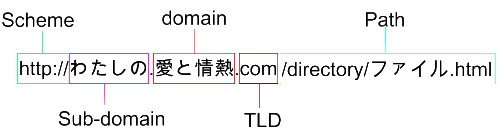

Before getting into it, a quick look at a normal IRI, or traditional URL. The first part indicates the scheme, which could be http://, https://, ftp://, or mailto:, among others. The scheme does not support IDN right now. The next part is the subdomain label. It supports Unicode and IDN, as does the next label, the domain name. Each of these labels is handled separately, meaning you can have Unicode in one label but only ASCII in the other. In that case, only the label with Unicode will be processed with Nameprep and Punycode. The next label is the TLD, or top-level domain. TLDs don't support IDN yet; however, most browsers will parse them as if they did. The last part is the path, which can be a combination of Unicode and ASCII, and should be treated as UTF-8 in almost all situations. The path does not have the same requirements as IDN; it's handled completely separately, just like the scheme.

Figure: IDN domain name, URI scheme, and path

Punycode

Punycode provides the encoding mechanism for representing a domain name with non-ASCII characters. So once you have your cool Unicode domain name like www.ҀѺѺ.com, Punycode can make it DNS-ready by converting it to all ASCII characters, so that it looks like www.XN--E3AAQ.com. I'm not using very good examples, because they both look bad, but Punycode in particular looks hideous. Still, it helps users spot a spoofed domain name like www.microsоft.com (where the second 'o' is the Cyrillic letter U+043E), which in Punycode looks like http://www.xn--microsft-sbh.com/.
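To make the spoofed example concrete, here is a minimal sketch using Python's built-in `idna` codec, which implements the IDNA rules from RFC 3490 (Nameprep followed by Punycode, applied label by label). The variable names are my own, and the Cyrillic letter is written as an escape so it can't be mistaken for an ASCII 'o'.

```python
# "micros\u043eft" contains U+043E (CYRILLIC SMALL LETTER O) in place of ASCII "o".
spoofed = "www.micros\u043eft.com"

# Only the label containing non-ASCII characters is converted;
# "www" and "com" pass through untouched.
ascii_form = spoofed.encode("idna").decode("ascii")
print(ascii_form)  # www.xn--microsft-sbh.com

# Decoding the ASCII form gives back the Unicode name.
round_trip = ascii_form.encode("ascii").decode("idna")

# A genuinely all-ASCII name is left alone.
legit = "www.microsoft.com".encode("idna").decode("ascii")
```

The spoofed and legitimate names are indistinguishable on screen, but their ASCII forms are not.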

Nameprep

Wait a second, what about Nameprep? This specification requires that normalization form KC (NFKC) be applied to IDNs. NFKC performs a compatibility decomposition, followed by canonical composition. If that sounds confusing, read the spec and see just how confusing it can be! The reason I say Nameprep sort of provides anti-spoofing protection against homograph attacks is that the normalization reduces some characters to their compatibility equivalents. For example, the full-width Latin character 'Ｗ' (U+FF37) looks a lot like the ASCII 'W' (U+0057). By normalizing the string with form KC, the full-width character is mapped down to its ASCII equivalent. This process reduces the chance of a spoof attack working for a large set of confusables.
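The full-width example is easy to check with Python's standard `unicodedata` module. This is only a sketch of the NFKC step, with the `idna` codec shown as one way to see the whole Nameprep mapping (which also case-folds); the host name `example.com` is a placeholder of mine.

```python
import unicodedata

fullwidth_w = "\uff37"  # FULLWIDTH LATIN CAPITAL LETTER W
normalized = unicodedata.normalize("NFKC", fullwidth_w)
print(normalized, hex(ord(normalized)))  # W 0x57

# NFKC only folds compatibility characters. A Cyrillic "о" (U+043E) is a
# distinct letter, not a compatibility variant, so it survives unchanged.
cyrillic_o = "\u043e"
still_cyrillic = unicodedata.normalize("NFKC", cyrillic_o)

# Through the idna codec, three full-width W's end up as a plain "www" label.
host = ("\uff37" * 3 + ".example.com").encode("idna").decode("ascii")
```

This is why the protection is only "sort of" there: compatibility lookalikes are folded away, while cross-script lookalikes are not.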

The IETF defines IDN in RFCs 3490, 3491, 3492 and 3454, and bases IDN on Unicode 3.2. This means that changes to the Unicode spec, currently at 5.1, will take a long time to get applied to most software that deals with IDNs. Searching for differences between Unicode 3.2 and 5.1, or the most current spec, is sure to yield some interesting test cases.
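One rough way to hunt for such differences, assuming Python is at hand: the standard `unicodedata` module exposes a frozen Unicode 3.2 database as `unicodedata.ucd_3_2_0`, alongside whatever newer version the interpreter ships with (reported by `unicodedata.unidata_version`, so not 5.1 specifically). The function name below is my own, and the sketch only looks for code points that were unassigned in 3.2 and are assigned now.

```python
import unicodedata

old = unicodedata.ucd_3_2_0  # Unicode 3.2 database, the version IDN is based on


def assigned_since_3_2(limit=0x10000):
    """Return code points below `limit` that are unassigned (category "Cn")
    in Unicode 3.2 but assigned in the interpreter's current database."""
    return [
        cp
        for cp in range(limit)
        if old.category(chr(cp)) == "Cn"
        and unicodedata.category(chr(cp)) != "Cn"
    ]


newer = assigned_since_3_2()
print(unicodedata.unidata_version, len(newer))
```

Each code point in the result is a candidate test case, since software written against Unicode 3.2 has no mapping or prohibition rule for it.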

TLD Whitelisting

After all this, it's still not enough to protect the innocent. Some registries have designed policies to prohibit or specially handle lookalike characters for the TLDs they represent. This is one approach, but are we now relying on a distributed network of trust and scattered policy? It seems that's part of the strategy with several browsers.

Firefox maintains a TLD whitelist. That means Firefox will display IDN domain names in their pure Unicode form for trusted TLDs, rather than converting them to Punycode in the display and URL bar. You can get at this configuration through about:config by looking at the network.IDN settings.
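The whitelist idea can be sketched in a few lines. This is an illustration of the general approach only, not Firefox's actual code: the function name and the contents of `TRUSTED_TLDS` are hypothetical.

```python
# Hypothetical whitelist; a real browser ships its own list of TLDs.
TRUSTED_TLDS = {"de", "jp"}


def display_form(hostname, trusted_tlds=TRUSTED_TLDS):
    """Return the Unicode form of `hostname` if its TLD is trusted,
    otherwise the Punycode (ASCII) form."""
    # Normalize to the ASCII form first, whatever form we were given.
    ascii_host = hostname.encode("idna").decode("ascii")
    tld = ascii_host.rsplit(".", 1)[-1].lower()
    if tld in trusted_tlds:
        return ascii_host.encode("ascii").decode("idna")
    return ascii_host


trusted = display_form("xn--bcher-kva.de")
untrusted = display_form("b\u00fccher.com")
print(trusted, untrusted)
```

The same label is shown as Unicode under a trusted TLD and as Punycode everywhere else, which is what makes the registry's own policy the real line of defense.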

Safari also maintains a whitelist of TLDs, although I don't know how to find this information. Opera makes its whitelist configurable through the opera:config#Network|IDNAWhiteList URL in your browser. I believe .com, .net, and .org were on this list; at least they were in mine until I clicked 'default', which reset the list.

Internet Explorer does not implement a TLD whitelist that I'm aware of, but it does support limited mixed-scripts within domain labels.
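A mixed-script check can be approximated as follows. This is a rough heuristic of my own and not IE's algorithm: Python's standard library has no script property, so the sketch uses the first word of each letter's Unicode character name (for example "LATIN" or "CYRILLIC") as a stand-in for its script.

```python
import unicodedata


def scripts_in_label(label):
    """Approximate the set of scripts used by the letters in a domain label."""
    scripts = set()
    for ch in label:
        if ch.isalpha():  # skip digits and hyphens
            # e.g. "CYRILLIC SMALL LETTER O" -> "CYRILLIC"
            scripts.add(unicodedata.name(ch).split(" ", 1)[0])
    return scripts


def is_mixed_script(label):
    return len(scripts_in_label(label)) > 1


print(scripts_in_label("micros\u043eft"))  # contains both LATIN and CYRILLIC
print(is_mixed_script("microsoft"))        # False
```

A check like this flags the spoofed microsоft label, but a label written entirely in one lookalike script would pass it.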

Browser testing by the W3C verifies this, and also documents the behavior of each browser. There are many differences across browsers of course, and Opera in particular seems to have several inconsistencies within its own operation.

IDN Testing

IDN testing and research have been going on for a while. Some good resources:

Although these resources have their own IDN testing pages, I made one of my own, mainly to test some characters I was interested in, along with some from Stringprep's list of prohibited characters.

Test Cases:

http://www.lookout.net/test-cases/idn-and-iri-spoofing-tests/

Eric Lawrence

2008-12-16T16:02:54.000Z

Correct, IE uses a different approach for mitigating homograph attacks, as described in the blog post you linked to.